Garment4D: Garment Reconstruction from Point Cloud Sequences

- Fangzhou Hong¹

- Liang Pan¹

- Zhongang Cai¹,²,³

- Ziwei Liu¹ ✉

- ¹ S-Lab, Nanyang Technological University

- ² SenseTime Research

- ³ Shanghai AI Laboratory

NeurIPS 2021 (Poster)

Abstract

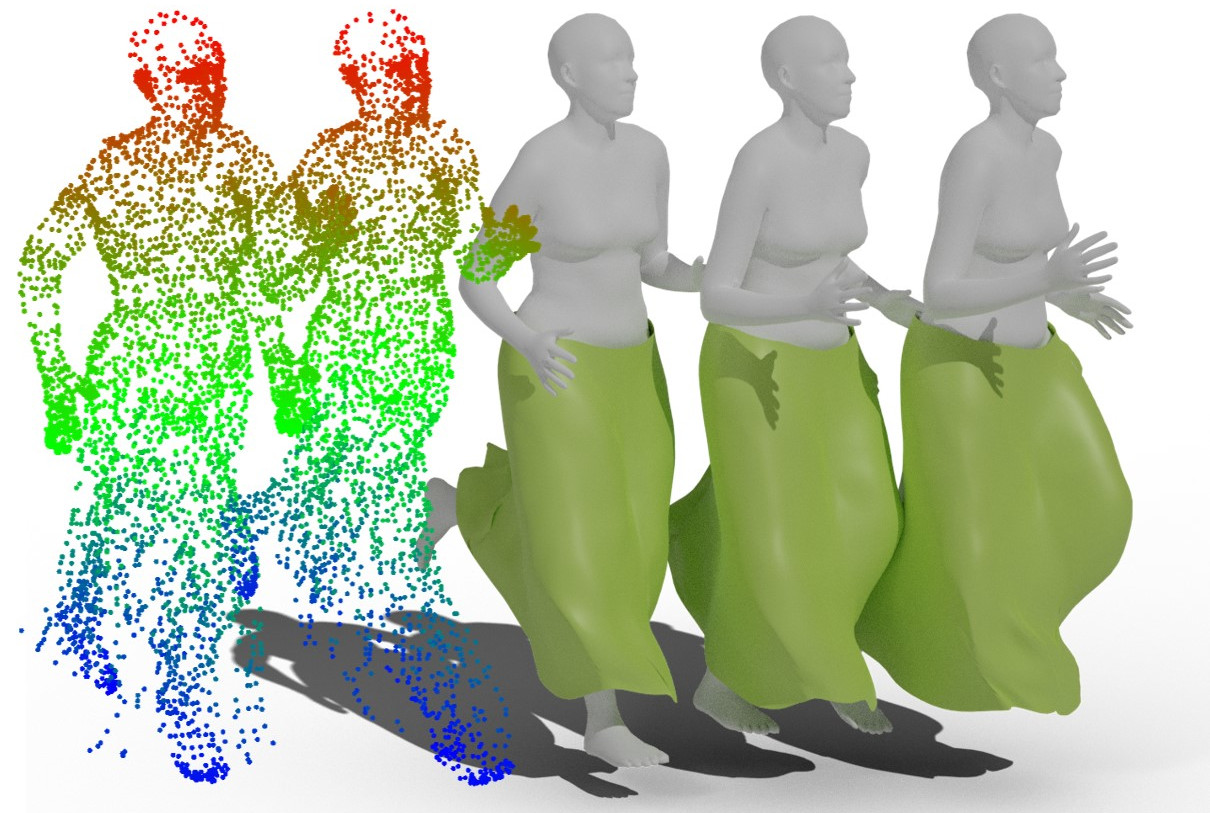

Learning to reconstruct 3D garments is important for dressing 3D human bodies of different shapes in different poses. Previous works typically rely on 2D images as input, which, however, suffer from scale and pose ambiguities. To circumvent the problems caused by 2D images, we propose a principled framework, Garment4D, that uses 3D point cloud sequences of dressed humans for garment reconstruction. Garment4D has three dedicated steps: sequential garment registration, canonical garment estimation, and posed garment reconstruction. The main challenges are two-fold: 1) effective 3D feature learning for fine details, and 2) capturing garment dynamics caused by the interaction between garments and the human body, especially for loose garments such as skirts. To address these challenges, we introduce a novel Proposal-Guided Hierarchical Feature Network and an Iterative Graph Convolution Network, which integrate both high-level semantic features and low-level geometric features to reconstruct fine details. Furthermore, we propose a Temporal Transformer to capture smooth garment motions. Unlike the outputs of non-parametric methods, the garment meshes reconstructed by our method are separable from the human body and highly interpretable, which is desirable for downstream tasks. As the first attempt at this task, we demonstrate high-quality reconstruction results both qualitatively and quantitatively through extensive experiments.
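The abstract names a Temporal Transformer for capturing smooth garment motion but gives no architectural details. As a rough illustration only, the sketch below applies plain scaled dot-product self-attention along the time axis of per-vertex garment features. The function name `temporal_self_attention`, the `(T, N, C)` layout (frames, vertices, feature channels), and the use of the raw features as queries, keys, and values are assumptions made for brevity; this is not the Garment4D implementation. A full Transformer layer would normally also include learned projections, multiple heads, and feed-forward sublayers.

```python
import numpy as np


def temporal_self_attention(x: np.ndarray) -> np.ndarray:
    """Hypothetical sketch: self-attention over time, independently per vertex.

    x has shape (T, N, C): T frames, N garment vertices, C feature channels
    (an assumed layout). Queries, keys, and values are the raw features
    (identity projections), so no parameters are learned here.
    """
    T, N, C = x.shape
    per_vertex = x.transpose(1, 0, 2)  # (N, T, C): one trajectory per vertex

    # Scaled dot-product similarity between every pair of frames: (N, T, T).
    scores = per_vertex @ per_vertex.transpose(0, 2, 1) / np.sqrt(C)

    # Numerically stable softmax over the "attended-to" frame axis.
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)

    # Each output frame is a weighted average of all frames of the same vertex.
    out = weights @ per_vertex  # (N, T, C)
    return out.transpose(1, 0, 2)  # back to (T, N, C)
```

Because every output frame is a softmax-weighted average of all input frames for the same vertex, this step can only blend features across time. That is one intuition for why temporal attention may help suppress frame-to-frame jitter, although a learned model is free to weight frames very unevenly.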

BibTeX

@inproceedings{hong2021garmentd,
  title={Garment4D: Garment Reconstruction from Point Cloud Sequences},
  author={Fangzhou Hong and Liang Pan and Zhongang Cai and Ziwei Liu},
  booktitle={Thirty-Fifth Conference on Neural Information Processing Systems},
  year={2021},
  url={https://openreview.net/forum?id=aF60hOEwHP}
}